Globally Stable Neural Imitation Policies

May 10, 2024

1 min read

Amin Abyaneh

Mariana Sosa

Hsiu-Chin Lin

Image credit: kinovarobotics.com

Abstract

Imitation learning presents an effective approach to alleviate the resource-intensive and time-consuming nature of policy learning from scratch in the solution space. Even though the resulting policy can mimic expert demonstrations reliably, it often lacks predictability in unexplored regions of the state space, giving rise to significant safety concerns in the face of perturbations. To address these challenges, we introduce the Stable Neural Dynamical System (SNDS), an imitation learning regime which produces a policy with formal stability guarantees. We deploy a neural policy architecture that facilitates the representation of stability based on the Lyapunov theorem, and jointly train the policy and its corresponding Lyapunov candidate to ensure global stability. We validate our approach by conducting extensive experiments in simulation and successfully deploying the trained policies on a real-world manipulator arm. The experimental results demonstrate that our method overcomes the instability, accuracy, and computational intensity problems associated with previous imitation learning methods, making it a promising solution for stable policy learning in complex planning scenarios.

Publication

In IEEE International Conference on Robotics and Automation, 2024

Design overview

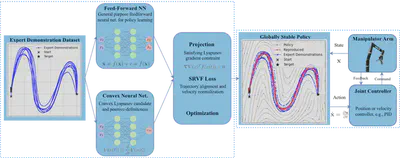

Our approach uses a neural policy architecture based on the Lyapunov theorem, with a projection applied at each step, to provide formal stability guarantees. The cost function is tailored to yield reliable policy rollouts by including both a state term and an action term in the overall loss.
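To make the two ingredients above concrete, here is a minimal sketch in plain NumPy. It is not the SNDS implementation. It assumes a fixed quadratic Lyapunov candidate `V(x) = x^T P x` and a hand-picked linear nominal policy, whereas SNDS learns both as neural networks. The projection rule shown is one common way to enforce a Lyapunov decrease condition and may differ from the one used in the paper. All names (`P`, `ALPHA`, `nominal_policy`, `stable_policy`, `imitation_loss`) are hypothetical.

```python
import numpy as np

# Assumption: fixed quadratic Lyapunov candidate V(x) = x^T P x, P positive definite.
# SNDS trains a neural candidate jointly with the policy; P is a stand-in.
P = np.array([[2.0, 0.5], [0.5, 1.0]])
ALPHA = 0.1  # hypothetical decay rate in the condition dV/dt <= -ALPHA * V


def lyapunov(x):
    return float(x @ P @ x)


def lyapunov_grad(x):
    return 2.0 * P @ x  # valid because P is symmetric


def nominal_policy(x):
    # Hypothetical unconstrained policy, deliberately unstable
    # (its eigenvalues have positive real part).
    A = np.array([[0.5, 1.0], [-1.0, 0.3]])
    return A @ x


def stable_policy(x):
    """Project the nominal velocity so that grad V . f <= -ALPHA * V."""
    f_hat = nominal_policy(x)
    grad = lyapunov_grad(x)
    grad_sq = float(grad @ grad)
    if grad_sq < 1e-12:
        return np.zeros_like(x)  # at the equilibrium, stay put
    # Amount by which the nominal velocity violates the decrease condition.
    violation = max(0.0, float(grad @ f_hat) + ALPHA * lyapunov(x))
    # Remove just enough of the component along grad V to cancel the violation.
    return f_hat - grad * violation / grad_sq


def rollout(x0, steps, dt):
    """Euler rollout; returns the visited states and the actions taken there."""
    x = np.array(x0, dtype=float)
    states, actions = [], []
    for _ in range(steps):
        u = stable_policy(x)
        states.append(x.copy())
        actions.append(u)
        x = x + dt * u
    return np.array(states), np.array(actions)


def imitation_loss(x0, expert_states, expert_actions, dt):
    """Mean squared error with both a state term and an action term."""
    states, actions = rollout(x0, len(expert_states), dt)
    state_term = np.mean(np.sum((states - expert_states) ** 2, axis=1))
    action_term = np.mean(np.sum((actions - expert_actions) ** 2, axis=1))
    return float(state_term + action_term)
```

In this sketch the projection leaves the nominal velocity untouched wherever it already decreases `V` fast enough, and otherwise subtracts the smallest correction along the gradient of `V`. Because the loss is computed along a rollout, errors in the state term penalize drift that a purely per-step action loss would not see.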

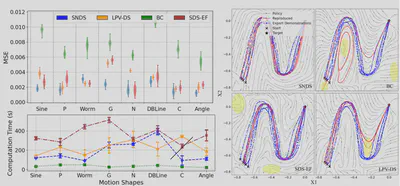

Summary of results

Through extensive simulations and real-world tests on a manipulator arm, we demonstrated that SNDS addresses instability, accuracy, and computational challenges better than previous imitation learning methods, making it a promising solution for stable policy learning in complex scenarios.