Safe RL Policy Optimization

[Ongoing Project] Learning model-free reinforcement learning policies with internal safety and stability guarantees, mainly for manipulation and locomotion tasks. The experiments leverage the domain randomization and sim-to-real capabilities of the Isaac Sim and Isaac Lab simulators. The project is in collaboration with Mitacs and is funded by the Mitacs Accelerate Fellowship and an industrial partner, Sycodal Electronics Inc.

Feb 24, 2024

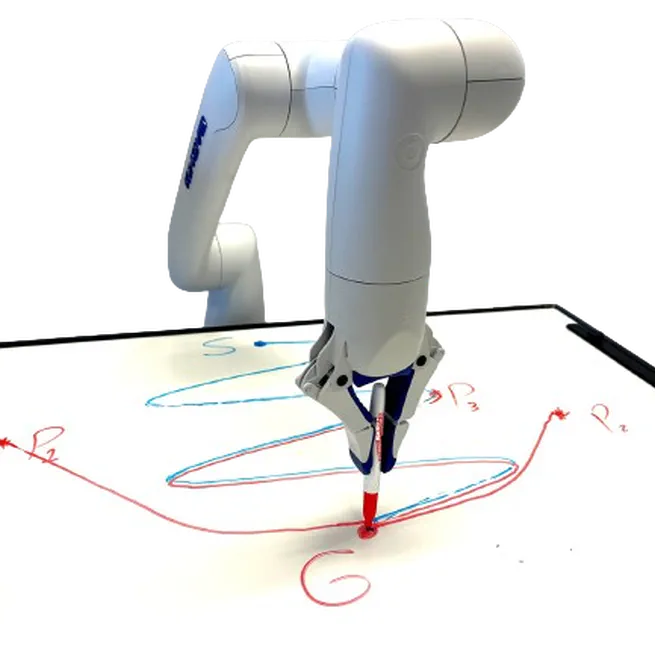

Lyapunov-Stable Polynomial Imitation Policies

Learning polynomial imitation policies with guaranteed stability and out-of-distribution recovery.

Oct 26, 2023