We introduce a class of contractive imitation policies with theoretical guarantees and out-of-sample error bounds for robot learning.

Aug 22, 2024
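Contraction means that any two trajectories of a policy converge toward each other. The minimal sketch below illustrates only that property, not the project's policy class. It assumes a hand-picked linear vector field, and `A`, `X_STAR`, `DT` and the helper functions are all hypothetical.

```python
import math

# Hypothetical 2-D linear policy x_dot = A (x - X_STAR). The symmetric part of A,
# (A + A^T) / 2 = -I, is negative definite, so the vector field is contracting.
A = [[-1.0, 0.5], [-0.5, -1.0]]
X_STAR = [1.0, 2.0]  # hypothetical attractor (goal state)
DT = 0.1             # forward-Euler integration step

def policy(x):
    """Velocity command for state x."""
    d = [x[0] - X_STAR[0], x[1] - X_STAR[1]]
    return [A[0][0] * d[0] + A[0][1] * d[1],
            A[1][0] * d[0] + A[1][1] * d[1]]

def rollout(x0, steps):
    """Integrate the policy with forward Euler for the given number of steps."""
    x = list(x0)
    for _ in range(steps):
        v = policy(x)
        x = [x[0] + DT * v[0], x[1] + DT * v[1]]
    return x

def dist(a, b):
    return math.hypot(a[0] - b[0], a[1] - b[1])

xa, xb = [5.0, -3.0], [-4.0, 0.5]
# Each Euler step multiplies the gap between two rollouts by
# sqrt((1 - DT)^2 + (0.5 * DT)^2) ~= 0.901, so the gap shrinks geometrically.
d0 = dist(xa, xb)
d50 = dist(rollout(xa, 50), rollout(xb, 50))
print(f"initial gap {d0:.3f}, gap after 50 steps {d50:.5f}")
```

Because the gap shrinks regardless of where the two rollouts start, a small perturbation away from a demonstrated trajectory cannot grow over time in this toy example.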

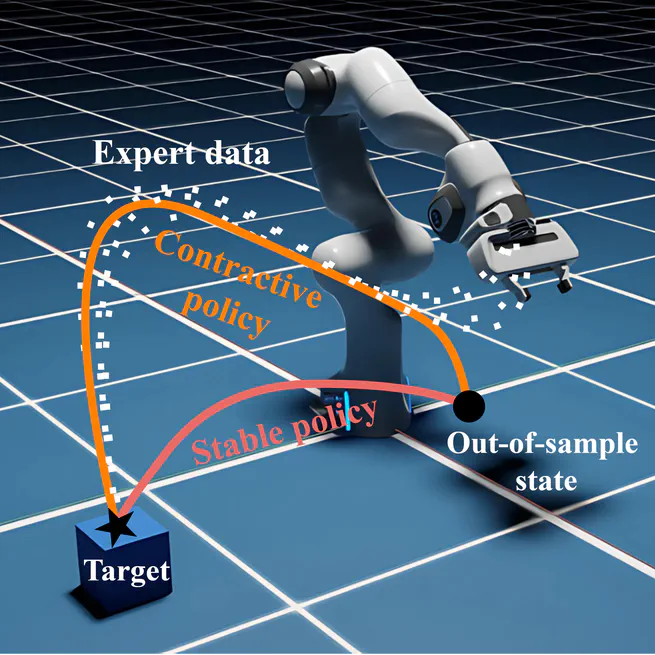

In this project, we developed the Stable Neural Dynamical System (SNDS) to improve imitation policies by guaranteeing the stability of the trained policy. Our approach uses a neural policy architecture grounded in Lyapunov stability theory to provide formal guarantees. We jointly train the policy and a Lyapunov candidate to ensure global stability.

May 10, 2024
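SNDS enforces stability through a Lyapunov candidate trained jointly with the policy. As a hedged illustration of how a Lyapunov function can constrain a neural vector field, the sketch below uses one well-known construction: it projects the raw network output so that `dV/dt <= -ALPHA * V` holds everywhere. This is not necessarily the SNDS architecture. Training is omitted, and the network weights, the quadratic candidate `P`, `ALPHA`, `X_STAR` and all helper names are hypothetical and fixed by hand.

```python
import math
import random

X_STAR = [0.0, 0.0]           # hypothetical attractor (end point of the demonstrations)
P = [[2.0, 0.3], [0.3, 1.0]]  # hypothetical symmetric positive-definite Lyapunov matrix
ALPHA = 0.5                   # required exponential decay rate of V

# Hypothetical "neural" raw policy: one tanh hidden layer with fixed, hand-picked weights.
W1 = [[1.2, -0.7], [0.4, 0.9], [-1.1, 0.3]]
B1 = [0.1, -0.2, 0.05]
W2 = [[0.8, -0.5, 1.0], [-0.6, 0.9, 0.4]]

def raw_policy(x):
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, B1)]
    return [sum(w * hj for w, hj in zip(row, h)) for row in W2]

def lyapunov(x):
    """Quadratic Lyapunov candidate V(x) = (x - x*)^T P (x - x*)."""
    d = [x[0] - X_STAR[0], x[1] - X_STAR[1]]
    return sum(d[i] * P[i][j] * d[j] for i in range(2) for j in range(2))

def lyapunov_grad(x):
    """grad V(x) = 2 P (x - x*), valid because P is symmetric."""
    d = [x[0] - X_STAR[0], x[1] - X_STAR[1]]
    return [2.0 * (P[i][0] * d[0] + P[i][1] * d[1]) for i in range(2)]

def stable_policy(x):
    """Remove the part of the raw velocity that would violate dV/dt <= -ALPHA * V."""
    f = raw_policy(x)
    g = lyapunov_grad(x)
    gg = g[0] ** 2 + g[1] ** 2
    if gg == 0.0:  # exactly at the attractor
        return [0.0, 0.0]
    violation = max(0.0, g[0] * f[0] + g[1] * f[1] + ALPHA * lyapunov(x))
    return [f[i] - violation / gg * g[i] for i in range(2)]

# Check the decrease condition at random states.
random.seed(0)
samples = [[random.uniform(-3, 3), random.uniform(-3, 3)] for _ in range(1000)]
for s in samples:
    g, f = lyapunov_grad(s), stable_policy(s)
    assert g[0] * f[0] + g[1] * f[1] <= -ALPHA * lyapunov(s) + 1e-9

# Forward-Euler rollout from a hypothetical start state.
x0 = [3.0, -2.0]
x = list(x0)
for _ in range(10000):
    v = stable_policy(x)
    x = [x[0] + 0.001 * v[0], x[1] + 0.001 * v[1]]
print(f"V(x0) = {lyapunov(x0):.2f}, V(x_T) = {lyapunov(x):.4f}")
```

The correction only removes velocity along `grad V` when the raw output would make `V` decrease too slowly, so the network remains unconstrained in all other directions. In a learned setting, `V` would also be parameterized and optimized together with the policy.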

[Ongoing Project] Learning unconstrained and stable imitation policies from state-only expert demonstrations, applicable to a variety of robotic platforms. All experiments and simulations are conducted in NVIDIA Isaac Lab and Isaac Gym. The project is funded by a competitive scholarship from the Swiss National Centres of Competence in Research (NCCR Automation) in collaboration with EPFL.

Apr 28, 2024

[Ongoing Project] Training model-free reinforcement learning policies with internal safety and stability guarantees, primarily for manipulation and locomotion tasks. The experiments leverage the domain randomization and sim-to-real capabilities of the Isaac Sim and Isaac Lab simulators. The project is a collaboration with Mitacs, funded by a Mitacs Accelerate Fellowship and an industrial partner, Sycodal Electronics Inc.

Feb 24, 2024

Learning globally stable neural imitation policies (SNDS).

Oct 26, 2023

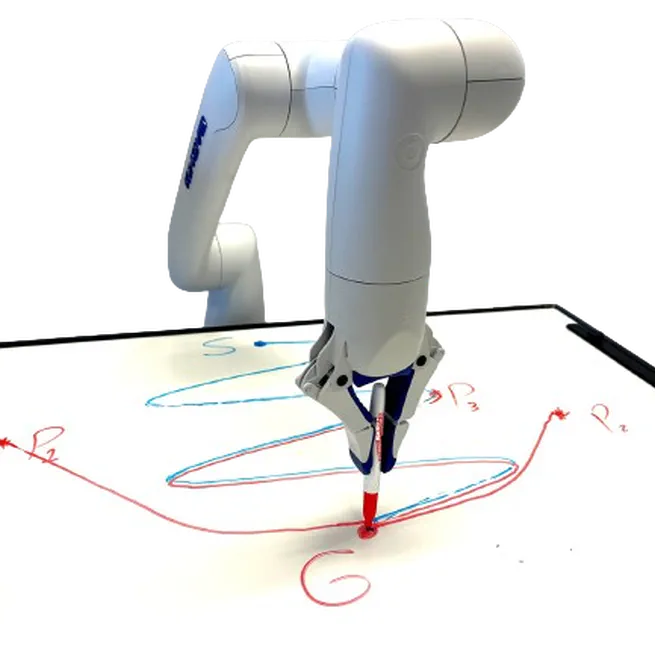

Learning polynomial imitation policies with guaranteed stability and out-of-distribution recovery.

Oct 26, 2023

We present an approach for learning policies represented by globally stable nonlinear dynamical systems. We model the nonlinear dynamical system as a parametric polynomial and learn the polynomial's coefficients jointly with a learnable Lyapunov candidate to guarantee stability and predictability of the policy.

Oct 12, 2023
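The polynomial policy and Lyapunov condition described above can be illustrated with a small sketch. It assumes a 2-D cubic vector field whose hand-picked coefficients stand in for learned ones, and a fixed quadratic Lyapunov candidate in place of the learnable one. `COEFFS` and all helper names are hypothetical. For this choice, the cross terms cancel and dV/dt = -2(x1^2 + x2^2 + x1^4 + x2^4), which is negative everywhere except at the origin. The grid check only spot-checks this numerically.

```python
# Hypothetical coefficients for a cubic policy in 2-D.
# Each entry {(i, j): c} contributes c * x1**i * x2**j to that velocity component.
COEFFS = [
    {(1, 0): -1.0, (0, 1): 1.0, (3, 0): -1.0},   # f1 = -x1 + x2 - x1^3
    {(1, 0): -1.0, (0, 1): -1.0, (0, 3): -1.0},  # f2 = -x1 - x2 - x2^3
]

def poly_policy(x):
    """Evaluate the polynomial vector field at state x (attractor at the origin)."""
    return [sum(c * x[0] ** i * x[1] ** j for (i, j), c in comp.items()) for comp in COEFFS]

def lyapunov(x):
    """Hypothetical fixed Lyapunov candidate V(x) = x1^2 + x2^2."""
    return x[0] ** 2 + x[1] ** 2

def lyapunov_derivative(x):
    """dV/dt = grad V(x) . f(x); analytically -2(x1^2 + x2^2 + x1^4 + x2^4) here."""
    f = poly_policy(x)
    return 2.0 * x[0] * f[0] + 2.0 * x[1] * f[1]

def decreases_on_grid(radius=2.0, n=41):
    """Check dV/dt < 0 at every nonzero point of an n x n grid on [-radius, radius]^2."""
    ticks = [-radius + 2.0 * radius * k / (n - 1) for k in range(n)]
    return all(lyapunov_derivative([a, b]) < 0.0
               for a in ticks for b in ticks if a * a + b * b > 1e-12)

# Forward-Euler rollout from a hypothetical start state.
x = [1.5, -1.0]
for _ in range(2000):
    v = poly_policy(x)
    x = [x[0] + 0.01 * v[0], x[1] + 0.01 * v[1]]
print(decreases_on_grid(), lyapunov(x))
```

Since V here is radially unbounded and its derivative is negative away from the origin, the origin is globally asymptotically stable for this toy field. When both the coefficients and V are learned, this decrease condition has to be enforced during training rather than verified afterwards.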